X, Y, and Z are three random variables with mutual pairwise correlation rho. What is the minimum possible value that rho can take?

Definitions and concepts:

Before moving on to the actual question, let’s establish some base concepts and terminology. If you are comfortable with the notions of correlation, covariance, and positive semi-definite matrices, you can jump right ahead.

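Definition 1: The correlation coefficient between two RVs, X and Y, is equal to

\[\text{corr}(X,Y) = \dfrac{\text{cov}(X,Y)}{\sqrt{\text{var}(X)}\sqrt{\text{var}(Y)}}\]

Using the definition of the covariance and the standard deviation formula, this can be further expanded as:

\[\text{corr}(X,Y) = \dfrac{\mathbb{E}\left[(X-\mathbb{E}[X])(Y-\mathbb{E}[Y])\right]}{\sqrt{\mathbb{E}\left[(X-\mathbb{E}[X])^2\right]}\sqrt{\mathbb{E}\left[(Y-\mathbb{E}[Y])^2\right]}}\]

As, from linearity, $\mathbb{E}\left[(X-\mathbb{E}[X])(Y-\mathbb{E}[Y])\right] = \mathbb{E}[XY] - \mathbb{E}[X]\mathbb{E}[Y]$, we get:

\[\text{corr}(X,Y) = \dfrac{\mathbb{E}[XY] - \mathbb{E}[X]\mathbb{E}[Y]}{\sqrt{\mathbb{E}[X^2] - \mathbb{E}[X]^2}\sqrt{\mathbb{E}[Y^2] - \mathbb{E}[Y]^2}}\]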
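As a quick illustration of the last formula, here is a minimal sketch that computes the correlation of a small set of equally likely paired outcomes. The helper names `mean` and `corr` and the sample values are made up for this example.

```python
from math import sqrt

def mean(values):
    """Expectation of equally likely outcomes."""
    return sum(values) / len(values)

def corr(xs, ys):
    """Correlation via (E[XY] - E[X]E[Y]) / (sd(X) * sd(Y))."""
    ex, ey = mean(xs), mean(ys)
    exy = mean([x * y for x, y in zip(xs, ys)])
    var_x = mean([x * x for x in xs]) - ex ** 2
    var_y = mean([y * y for y in ys]) - ey ** 2
    return (exy - ex * ey) / sqrt(var_x * var_y)

xs = [1.0, 2.0, 3.0, 4.0]
print(corr(xs, [2.0, 4.0, 6.0, 8.0]))  # increasing linear relation
print(corr(xs, [8.0, 6.0, 4.0, 2.0]))  # decreasing linear relation
```

The two calls sit at the extremes of the range of the correlation coefficient, since the second variable is an exact linear function of the first.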

Property 1: The correlation coefficient takes values from -1 to 1

Proof:

Let X and Y be two random variables. We know that $X + \lambda Y$ is also an RV for any $\lambda \in \mathbb{R}$, and the variance of any RV is non-negative.

We thus now have:

\[0 \leq \text{var}(X + \lambda Y) = \text{var}(X) + 2\lambda\,\text{cov}(X,Y) + \lambda^2\,\text{var}(Y)\]

Looking now at the RHS as a quadratic function of $\lambda$ that is non-negative everywhere, we can affirm that its discriminant is non-positive:

\[4\,\text{cov}(X,Y)^2 - 4\,\text{var}(X)\,\text{var}(Y) \leq 0\]

Consequently,

\[\text{cov}(X,Y)^2 \leq \text{var}(X)\,\text{var}(Y)\]

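If $\text{var}(X)\,\text{var}(Y) = 0$, then X or Y must be (almost surely) a constant, thus $\text{cov}(X,Y) = 0$ and the correlation, conventionally taken to be 0 in this degenerate case, lies within the bounds ✅.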

Otherwise, we can divide by it, and get:

\[\dfrac{\text{cov}(X,Y)^2}{\text{var}(X)\,\text{var}(Y)} \leq 1\]

We further simplify:

![Rendered by QuickLaTeX.com \[\left(\dfrac{\text{cov}(X,Y)}{\sqrt{\text{var}(X)}\sqrt{\text{var}(Y)}}\right)^2 \leq 1\]](https://atypicalquant.net/wp-content/ql-cache/quicklatex.com-d392a7d22886e51229dfce24d3b7ff01_l3.png)

The expression inside the square is exactly $\text{corr}(X,Y)$, so we get:

\[\text{corr}(X,Y)^2 \leq 1\]

From this we get our final conclusion:

\[-1 \leq \text{corr}(X,Y) \leq 1\]
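The discriminant argument can be checked on data. The sketch below uses exact rational arithmetic on an arbitrary, randomly generated sample (the sample, the seed and the helper names are assumptions made for illustration) and confirms that the quadratic in $\lambda$ stays non-negative and that its discriminant is non-positive.

```python
from fractions import Fraction
import random

def mean(values):
    return sum(values, Fraction(0)) / len(values)

def cov(xs, ys):
    """Covariance of equally likely paired outcomes."""
    mx, my = mean(xs), mean(ys)
    return mean([(x - mx) * (y - my) for x, y in zip(xs, ys)])

random.seed(0)  # arbitrary seed, only to make the sample reproducible
xs = [Fraction(random.randint(-10, 10)) for _ in range(50)]
ys = [Fraction(random.randint(-10, 10)) for _ in range(50)]

var_x, var_y, cov_xy = cov(xs, xs), cov(ys, ys), cov(xs, ys)

def quadratic(lam):
    """var(X + lam * Y), expanded as a quadratic in lam."""
    return var_x + 2 * lam * cov_xy + lam ** 2 * var_y

discriminant = 4 * cov_xy ** 2 - 4 * var_x * var_y
print(discriminant <= 0)
print(all(quadratic(Fraction(k, 4)) >= 0 for k in range(-40, 41)))
```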

Definition 2: The covariance (respectively correlation) matrix of a set of RVs, for example X, Y and Z, is a square matrix giving the covariance (respectively correlation) between each pair of variables, i.e.:

![Rendered by QuickLaTeX.com \[\text{corr}([X,Y,Z]) = \begin{pmatrix} \text{corr}(X,X)&\text{corr}(X,Y)&\text{corr}(X,Z) \\ \text{corr}(Y,X)&\text{corr}(Y,Y)&\text{corr}(Y,Z) \\ \text{corr}(Z,X)&\text{corr}(Z,Y)&\text{corr}(Z,Z) \end{pmatrix}\]](https://atypicalquant.net/wp-content/ql-cache/quicklatex.com-f944e892ee1938484525434b04a8746f_l3.png)

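Definition 3: A minor of a matrix is the determinant of a smaller square matrix obtained by deleting some of its rows and columns.

Definition 4: For an $n \times n$ matrix, the leading principal minor of order k is the minor of order k obtained by deleting the last n-k rows and columns from the matrix, i.e. the determinant of its top-left $k \times k$ block.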

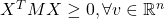

Definition 5: An $n \times n$ symmetric matrix M is said to be positive semi-definite if $x^T M x \geq 0$ for all $x \in \mathbb{R}^n$.

Property 2: A positive semi-definite matrix has all leading principal minors non-negative

Proof:

This proof is rather long and out of scope, but you can give it a read, for example, here: Sylvester’s Criterion (Math 484: Nonlinear Programming, University of Illinois, 2019). Do keep in mind that we only need one implication of the first bullet: a positive semi-definite matrix has non-negative minors.
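To make Definitions 3 and 4 concrete, here is a small sketch that lists the leading principal minors of a matrix. The function names `det` and `leading_principal_minors` and the example matrices are illustrative choices, not part of the original text.

```python
def det(m):
    """Determinant by Laplace expansion along the first row (fine for small matrices)."""
    if len(m) == 1:
        return m[0][0]
    total = 0
    for col, entry in enumerate(m[0]):
        sub = [row[:col] + row[col + 1:] for row in m[1:]]
        total += (-1) ** col * entry * det(sub)
    return total

def leading_principal_minors(m):
    """Determinants of the top-left k x k blocks, for k = 1..n."""
    return [det([row[:k] for row in m[:k]]) for k in range(1, len(m) + 1)]

print(leading_principal_minors([[2, -1, 0], [-1, 2, -1], [0, -1, 2]]))  # positive semi-definite
print(leading_principal_minors([[1, 2], [2, 1]]))                       # not positive semi-definite
print(leading_principal_minors([[0, 0], [0, -1]]))                      # not positive semi-definite
```

The last matrix shows why only one direction of the statement is used here: all its leading principal minors are non-negative, yet $x^T M x = -1$ for $x = (0, 1)^T$, so non-negative leading minors alone do not guarantee positive semi-definiteness.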

Property 3: For any set of random variables, both their covariance matrix and their correlation matrix are positive semi-definite

Proof:

We will use the same trick that we employed in our previous proof. Consider $X_1, X_2, \ldots, X_n$ a set of random variables; then, we know that the variance of any weighted sum is non-negative, i.e.:

![Rendered by QuickLaTeX.com \[\text{var}\left( \displaystyle\sum_{i} c_iX_i \right) \geq 0 , \forall c_i \in \mathbb{R}\]](https://atypicalquant.net/wp-content/ql-cache/quicklatex.com-9056849c24817c85bd0a2ae822569d41_l3.png)

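Thus, expanding the variance of the sum:

\[\sum_{i}\sum_{j} c_i c_j \,\text{cov}(X_i, X_j) \geq 0 , \forall c_i \in \mathbb{R}\]

Denoting $c = (c_1, \ldots, c_n)^T$ and $\Sigma = \left(\text{cov}(X_i,X_j)\right)_{i,j}$, we get that

\[c^T \Sigma\, c \geq 0 , \forall c \in \mathbb{R}^n \quad (1)\]

Similarly, writing $\text{cov}(X_i,X_j) = \sigma_i \sigma_j\,\text{corr}(X_i,X_j)$ with $\sigma_i = \sqrt{\text{var}(X_i)} > 0$, and denoting $d = (c_1\sigma_1, \ldots, c_n\sigma_n)^T$ and $R = \left(\text{corr}(X_i,X_j)\right)_{i,j}$, we get that:

\[d^T R\, d \geq 0 , \forall d \in \mathbb{R}^n \quad (2)\]

(every $d \in \mathbb{R}^n$ can be obtained this way, by choosing $c_i = d_i / \sigma_i$).

By Definition 5, (1) and (2) imply that the covariance matrix and the correlation matrix, respectively, are positive semi-definite ✅.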
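The identity behind this proof, that the quadratic form of the covariance matrix equals the variance of a weighted sum, can be verified exactly on data. The following is a sketch on an arbitrary random sample; the data, the seed and the helper names are assumptions made for illustration.

```python
from fractions import Fraction
import random

def mean(values):
    return sum(values, Fraction(0)) / len(values)

def cov(xs, ys):
    """Covariance of equally likely paired outcomes."""
    mx, my = mean(xs), mean(ys)
    return mean([(x - mx) * (y - my) for x, y in zip(xs, ys)])

random.seed(1)  # arbitrary seed, only to make the sample reproducible
# Three variables X_1, X_2, X_3, each observed 30 times.
data = [[Fraction(random.randint(-5, 5)) for _ in range(30)] for _ in range(3)]
sigma = [[cov(a, b) for b in data] for a in data]  # covariance matrix

def quadratic_form(c, m):
    """c^T m c for a vector c and a square matrix m."""
    n = len(c)
    return sum(c[i] * m[i][j] * c[j] for i in range(n) for j in range(n))

def weighted_sum_variance(c):
    """Variance of sum_i c_i X_i, computed directly from the observations."""
    combo = [sum(ci * row[t] for ci, row in zip(c, data)) for t in range(len(data[0]))]
    return cov(combo, combo)

for c in ([1, 1, 1], [1, -2, 3], [Fraction(1, 2), 0, -4]):
    print(c, quadratic_form(c, sigma) == weighted_sum_variance(c), quadratic_form(c, sigma) >= 0)
```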

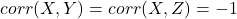

Property 4: If $\text{corr}(X,Y) = \text{corr}(X,Z) = -1$, then $\text{corr}(Y,Z) = 1$.

Proof:

The proof of this property is a direct consequence of the previous ones. Let $\rho = \text{corr}(Y,Z)$, and write the correlation matrix of the 3 random variables:

![Rendered by QuickLaTeX.com \[\text{corr}([X,Y,Z]) = \begin{pmatrix} \text{corr}(X,X) & \text{corr}(X,Y) & \text{corr}(X,Z)\\ \text{corr}(Y,X) & \text{corr}(Y,Y) & \text{corr}(Y,Z)\\ \text{corr}(Z,X) & \text{corr}(Z,Y) & \text{corr}(Z,Z) \end{pmatrix} = \begin{pmatrix} 1 & -1 & -1 \\ -1 & 1 & rho \\ -1 & \rho & 1 \end{pmatrix}\]](https://atypicalquant.net/wp-content/ql-cache/quicklatex.com-88df57d30463e45a4e7c8cf26c087565_l3.png)

The determinant of this matrix is $-(\rho - 1)^2$, which must be non-negative since the matrix is positive semi-definite (Properties 2 and 3).

\[-(\rho - 1)^2 \geq 0 \implies \rho = 1\]

Solutions:

Particular case: 3 RVs

When asked to give a minimum value, two things must be done: find a lower bound, and then prove that this bound is attainable by providing an example.

From Property 1, we know that the loosest bounds for $\rho$ are -1 and 1.

We can easily see that $\rho$ can’t be equal to -1. If the pairs $(X,Y)$ and $(Y,Z)$ have a correlation of -1, the correlation between X and Z must be 1 (Property 4).

We could also choose X, Y, and Z independent, in which case $\rho$ would be 0.

So, our minimal value is in the interval $(-1, 0]$.

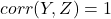

To get a tighter inequality, we use the necessary properties of the correlation matrix outlined in the first part. Write the correlation matrix of X, Y, and Z and set the condition for it to be positive semi-definite. Successively delete trailing rows and columns to get the leading principal minors, each of which must be non-negative (Properties 2 and 3).

\[\text{corr}([X,Y,Z]) = \begin{pmatrix} 1 & \rho & \rho \\ \rho & 1 & \rho \\ \rho & \rho & 1 \end{pmatrix}\]

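Order 1: $1 \geq 0$ ✅

Order 2: $\begin{vmatrix} 1 & \rho \\ \rho & 1 \end{vmatrix} = 1 - \rho^2 \geq 0$ (by Property 1) ✅

Order 3: $\begin{vmatrix} 1 & \rho & \rho \\ \rho & 1 & \rho \\ \rho & \rho & 1 \end{vmatrix} = 2\rho^3 - 3\rho^2 + 1 = (1-\rho)^2(2\rho + 1) \geq 0 \implies \rho \geq -\dfrac{1}{2}$

(To get to this, use, for example, the Rule of Sarrus + Factorize)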
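The three minors can be evaluated for a few candidate values of $\rho$ with exact fractions. This is a minimal sketch; `det3` and `leading_minors` are hypothetical helper names, and the factorised form $(1-\rho)^2(2\rho+1)$ is compared against the Rule of Sarrus result.

```python
from fractions import Fraction

def det3(m):
    """Determinant of a 3x3 matrix via the Rule of Sarrus."""
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * e * i + b * f * g + c * d * h - c * e * g - b * d * i - a * f * h

def leading_minors(rho):
    """Leading principal minors of the 3x3 matrix with 1 on the diagonal and rho elsewhere."""
    m = [[1, rho, rho], [rho, 1, rho], [rho, rho, 1]]
    return [m[0][0], m[0][0] * m[1][1] - m[0][1] * m[1][0], det3(m)]

for rho in [Fraction(-3, 5), Fraction(-1, 2), Fraction(0), Fraction(1)]:
    m1, m2, m3 = leading_minors(rho)
    factored = (1 - rho) ** 2 * (2 * rho + 1)
    print(rho, m1, m2, m3, m3 == factored)
```

For $\rho = -3/5$ the order 3 minor is negative, so that value is ruled out, while at $\rho = -1/2$ it is exactly zero, which is the boundary case.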

Now we have reduced the interval for $\rho$: the minimal value that $\rho$ can take is at least $-\frac{1}{2}$. If we find a triplet of random variables with pairwise correlations of $-\frac{1}{2}$, we have proved that this value is attainable, hence the minimum. Unfortunately, this is the tricky part. One way to construct such variables is:

Let $A_1, A_2, A_3$ be independent, identically distributed standard normal random variables (any i.i.d. variables with finite, non-zero variance would do; unit variance keeps the arithmetic simple) and consider $X_i = A_i - \bar{A}$, where $\bar{A} = \frac{1}{3}(A_1 + A_2 + A_3)$. If we expand the average and compute the coefficients, we get a simplified formula for the $X_i$'s:

\[X_i = \dfrac{2}{3}A_i - \dfrac{1}{3}\sum_{j \neq i} A_j\]

We compute the variance of the $X_i$'s by using the formula for the variance of a linear combination of random variables (in our case the $A_j$'s). The variance of each $A_j$ is equal to 1 and the covariance of $A_j$ and $A_l$, with $j \neq l$, is 0 as they are independent:

![Rendered by QuickLaTeX.com \[\text{var}(X_i) = \text{var}\left( \dfrac{2}{3}A_i - \dfrac{1}{3}\sum_{j \neq i} A_j \right) = \dfrac{4}{9} \text{var}(A_i) + \sum_{j \neq i} \left( - \dfrac{1}{3}\right)^2 var(A_j) + \sum_{j \neq i} C \cdot \text{cov}(A_i,A_j)\]](https://atypicalquant.net/wp-content/ql-cache/quicklatex.com-b3cbcdc6afd536975e4419f3db3c96a9_l3.png)

\[\text{var}(X_i) = \dfrac{4}{9} + 2 \cdot \dfrac{1}{9} + 0 = \dfrac{2}{3}\]

Thinking back to the correlation formula, we are missing the covariance between $X_i$ and $X_k$, with $i \neq k$. We again linearly expand the covariance, keeping in mind that the covariance of independent random variables is 0, and the covariance of a random variable with itself is its variance.

\[\text{cov}(X_i, X_k) = \text{cov} \left(\dfrac{2}{3}A_i - \dfrac{1}{3} \sum_{j\neq i} A_j ,\dfrac{2}{3}A_k - \dfrac{1}{3} \sum_{j\neq k} A_j \right)\]

\[= \dfrac{2}{3} \cdot \left(-\dfrac{1}{3}\right) \text{var}(A_i) + \left(-\dfrac{1}{3}\right) \cdot \dfrac{2}{3}\, \text{var}(A_k) + \left(-\dfrac{1}{3}\right)^2 \text{var}(A_l), \quad l \neq i,k\]

\[= -\dfrac{2}{9} - \dfrac{2}{9} + \dfrac{1}{9} = -\dfrac{1}{3}\]

Thus,

![Rendered by QuickLaTeX.com \[\text{corr}(X_i, X_k) = \dfrac{cov(X_i,X_k)}{\sqrt{\text{var}(X_i)\text{var}(X_k)}} = \dfrac{-\frac{1}{3}}{\frac{2}{3}} = - \dfrac{1}{2}, \forall i,k \in {1,2,3}, i\neq k\]](https://atypicalquant.net/wp-content/ql-cache/quicklatex.com-2b55936f4f673c920ece66af8de85d71_l3.png)

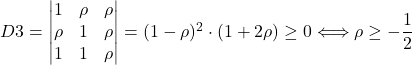

For this construction, all the pairwise correlations are equal to $-\frac{1}{2}$. Thus, we’ve obtained the minimum possible value for $\rho$. ✅
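A quick simulation gives an empirical check of the construction. The sketch below assumes standard normal $A_i$ (an assumption; any i.i.d. distribution with finite, non-zero variance leads to the same correlations), and the sample size and seed are arbitrary choices.

```python
import random
from math import sqrt

random.seed(42)   # arbitrary seed, only for reproducibility
N = 20000         # arbitrary number of simulated draws

def corr(xs, ys):
    """Sample correlation of two equally long lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / n
    var_x = sum((x - mx) ** 2 for x in xs) / n
    var_y = sum((y - my) ** 2 for y in ys) / n
    return cov / sqrt(var_x * var_y)

samples = [[], [], []]  # simulated values of X_1, X_2, X_3
for _ in range(N):
    a = [random.gauss(0.0, 1.0) for _ in range(3)]
    a_bar = sum(a) / 3
    for i in range(3):
        samples[i].append(a[i] - a_bar)  # X_i = A_i - mean(A)

pairwise = {(i + 1, k + 1): corr(samples[i], samples[k])
            for i in range(3) for k in range(i + 1, 3)}
print(pairwise)
```

Each of the three estimates should land close to $-0.5$, up to sampling noise.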

General Case

We have the correct result for the case of 3 random variables. Can we generalize it? How about the minimum value of $\rho$ when you have n random variables with equal pairwise correlations?

Just as before, we consider the correlation matrix and its properties.

![Rendered by QuickLaTeX.com \[\text{corr}([X_1,X_2,...,X_n]) = \begin{pmatrix} 1 & \rho & \cdots & \rho \\ \rho & 1 & \cdots & \rho \\ \vdots & \vdots & \ddots & \vdots \\ \rho & \rho & \cdots & 1 \end{pmatrix}\]](https://atypicalquant.net/wp-content/ql-cache/quicklatex.com-8a1bf0858ecff61b30660485e14abeb0_l3.png)

Like before, its determinant must be at least 0. Computing it is not as trivial, since shortcuts such as the Rule of Sarrus do not extend to an $n \times n$ matrix. However, we can use the decomposition along a column and induction to prove that its value is:

\[\det\left(\text{corr}([X_1,X_2,...,X_n])\right) = (1-\rho)^{n-1}\left(1 + (n-1)\rho\right)\]

For this to be greater than or equal to 0 (with $\rho \leq 1$, so that the first factor is non-negative), we must have that:

\[\rho \geq -\dfrac{1}{n-1}\]
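The closed form $(1-\rho)^{n-1}(1+(n-1)\rho)$ for this determinant can be sanity-checked against a brute-force determinant for small n. This is a sketch, not a proof; the function names are illustrative, and Laplace expansion is used only because the matrices are tiny.

```python
from fractions import Fraction

def det(m):
    """Determinant by Laplace expansion along the first row (small matrices only)."""
    if len(m) == 1:
        return m[0][0]
    total = 0
    for col, entry in enumerate(m[0]):
        sub = [row[:col] + row[col + 1:] for row in m[1:]]
        total += (-1) ** col * entry * det(sub)
    return total

def equicorrelation(n, rho):
    """n x n matrix with 1 on the diagonal and rho everywhere else."""
    return [[Fraction(1) if i == j else rho for j in range(n)] for i in range(n)]

def closed_form(n, rho):
    return (1 - rho) ** (n - 1) * (1 + (n - 1) * rho)

for n in range(2, 7):
    bound = Fraction(-1, n - 1)
    # At the bound the determinant vanishes; just below it, the determinant turns negative.
    below = bound - Fraction(1, 100)
    print(n, bound, det(equicorrelation(n, bound)), det(equicorrelation(n, below)) < 0)
```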

We again construct the random variables $X_i$ as the difference between $A_i$ and the mean of the $A_j$'s, where $A_1, \ldots, A_n$ are i.i.d. with unit variance (e.g. standard normal). With similar reasoning as in the previous part, we compute the variance of $X_i$ and get $\frac{n-1}{n}$. The covariance of $X_i$ and $X_k$ turns out to be $-\frac{1}{n}$. From the correlation formula, we obtain the correlation between any distinct $X_i$ and $X_k$ to be $-\frac{1}{n-1}$, just the lower bound we observed above.
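The variance, covariance and correlation of the general construction can be computed exactly from the coefficients of the $A_j$'s. The sketch below assumes independent $A_j$ with unit variance, in which case the covariance of two linear combinations is the dot product of their coefficient vectors; the function names are hypothetical.

```python
from fractions import Fraction

def centered_coefficients(n):
    """Row i holds the coefficients of A_1..A_n in X_i = A_i - mean(A)."""
    return [[Fraction(int(i == j)) - Fraction(1, n) for j in range(n)]
            for i in range(n)]

def covariance(row_i, row_k):
    """cov(sum_j a_j A_j, sum_j b_j A_j) = sum_j a_j b_j for independent unit-variance A_j."""
    return sum(a * b for a, b in zip(row_i, row_k))

def pairwise_stats(n):
    rows = centered_coefficients(n)
    var = covariance(rows[0], rows[0])
    cov = covariance(rows[0], rows[1])
    # All X_i share the same variance, so sqrt(var * var) is simply var.
    return var, cov, cov / var

for n in range(2, 8):
    print(n, pairwise_stats(n))
```

By symmetry, every pair of distinct indices gives the same covariance, which is why looking at the first two rows is enough.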

This generalization is consistent with our result for n equals three. At the same time, we can see that the value of the minimal correlation converges to 0 when n goes to infinity. This convergence supports the intuition that the more random variables we add, the harder it becomes for all of them to be negatively correlated with one another.